Supercomputer


A supercomputer is a computer at the frontline of current processing capacity, particularly speed of calculation. Supercomputers were introduced in the 1960s and were designed primarily by Seymour Cray at Control Data Corporation (CDC), and later at Cray Research. While the supercomputers of the 1970s used only a few processors, in the 1990s, machines with thousands of processors began to appear and by the end of the 20th century, massively parallel supercomputers with tens of thousands of "off-the-shelf" processors were the norm.[2][3]

Systems with a massive number of processors generally take one of two paths. In one approach, e.g. grid computing, the processing power of a large number of computers in distributed, diverse administrative domains is used opportunistically whenever a computer is available.[4] In the other, a large number of processors are used in close proximity to each other, e.g. in a computer cluster. The use of multi-core processors combined with centralization is an emerging direction.[5][6] As of June 2012, IBM Sequoia was the fastest supercomputer in the world.[7]

Supercomputers are used for highly calculation-intensive tasks such as quantum physics, weather forecasting, climate research, oil and gas exploration, molecular modeling (computing the structures and properties of chemical compounds, biological macromolecules, polymers, and crystals), and physical simulations (such as simulation of airplanes in wind tunnels, simulation of the detonation of nuclear weapons, and research into nuclear fusion).

History

The history of supercomputing goes back to the 1960s when a series of computers at Control Data Corporation (CDC) were designed by Seymour Cray to use innovative designs and parallelism to achieve superior computational peak performance.[8] The CDC 6600, released in 1964, is generally considered the first supercomputer.[9][10]

Cray left CDC in 1972 to form his own company.[11] Four years later, in 1976, he delivered the 80 MHz Cray-1, which became one of the most successful supercomputers in history.[12][13] The Cray-2, released in 1985, was an 8-processor liquid-cooled computer through which Fluorinert was pumped as it operated. It performed at 1.9 gigaflops and was the world's fastest until 1990.[14]

While the supercomputers of the 1980s used only a few processors, in the 1990s machines with thousands of processors began to appear both in the United States and in Japan, setting new computational performance records. Fujitsu's Numerical Wind Tunnel supercomputer used 166 vector processors to gain the top spot in 1994 with a peak speed of 1.7 gigaflops per processor.[15][16] The Hitachi SR2201 obtained a peak performance of 600 gigaflops in 1996 by using 2048 processors connected via a fast three-dimensional crossbar network.[17][18][19] The Intel Paragon could have 1000 to 4000 Intel i860 processors in various configurations, and was ranked the fastest in the world in 1993. The Paragon was a MIMD machine that connected processors via a high-speed two-dimensional mesh, allowing processes to execute on separate nodes and communicate via the Message Passing Interface.[20]
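The per-processor and aggregate figures quoted above are related by simple multiplication. The following is a minimal sketch of that arithmetic, assuming the theoretical peak is just the processor count times the per-processor peak (sustained performance on real workloads is lower). The function names are hypothetical and chosen only for illustration.

```python
def aggregate_peak_gflops(processors: int, gflops_per_processor: float) -> float:
    """Theoretical aggregate peak, assuming every processor runs at its peak."""
    return processors * gflops_per_processor


def per_processor_gflops(total_gflops: float, processors: int) -> float:
    """Average per-processor share of a quoted aggregate peak."""
    return total_gflops / processors


# Numerical Wind Tunnel: 166 vector processors at 1.7 gigaflops each.
nwt_peak = aggregate_peak_gflops(166, 1.7)

# Hitachi SR2201: 600 gigaflops spread over 2048 processors.
sr2201_share = per_processor_gflops(600, 2048)

print(f"NWT aggregate peak: about {nwt_peak:.1f} gigaflops")
print(f"SR2201 per-processor share: about {sr2201_share:.2f} gigaflops")
```

Under these assumptions the Numerical Wind Tunnel's aggregate peak comes to roughly 282 gigaflops, and each SR2201 processor contributes a little under 0.3 gigaflops.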

Hardware and architecture

Approaches to supercomputer architecture have taken dramatic turns since the earliest systems were introduced in the 1960s. Early supercomputer architectures pioneered by Seymour Cray relied on compact innovative designs and local parallelism to achieve superior computational peak performance.[8] However, in time the demand for increased computational power ushered in the age of massively parallel systems.

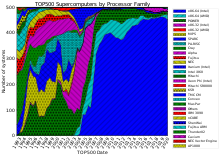

While the supercomputers of the 1970s used only a few processors, in the 1990s machines with thousands of processors began to appear, and by the end of the 20th century massively parallel supercomputers with tens of thousands of "off-the-shelf" processors were the norm. Supercomputers of the 21st century can use over 100,000 processors (some of them graphics processing units) connected by fast interconnects.[2][3]

Throughout the decades, the management of heat density has remained a key issue for most centralized supercomputers.[21][22][23] The large amount of heat generated by a system may also have other effects, e.g. reducing the lifetime of other system components.[24] There have been diverse approaches to heat management, from pumping Fluorinert through the system to hybrid liquid-air cooling and air cooling at normal air-conditioning temperatures.[14][25]

Systems with a massive number of processors generally take one of two paths. In one approach, e.g. grid computing, the processing power of a large number of computers in distributed, diverse administrative domains is used opportunistically whenever a computer is available.[4] In the other, a large number of processors are used in close proximity to each other, e.g. in a computer cluster. In such a centralized massively parallel system, the speed and flexibility of the interconnect become very important, and modern supercomputers have used approaches ranging from enhanced InfiniBand systems to three-dimensional torus interconnects.[26][27] The use of multi-core processors combined with centralization is an emerging direction, e.g. in the Cyclops64 system.[5][6]
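To illustrate what a three-dimensional torus interconnect means in practice, the sketch below computes a node's directly linked neighbors and the minimum hop count between two nodes when every axis wraps around. It is a simplified model, not the routing logic of any particular machine: the function names, the coordinate scheme, and the 4 x 4 x 4 size are assumptions made for the example.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]


def torus_neighbors(coord: Coord, dims: Coord) -> List[Coord]:
    """Return the six directly linked neighbors of `coord` in a 3D torus.

    Each axis wraps around, so a node on the "edge" links to the node on
    the opposite side. Assumes every dimension is at least 3; with smaller
    dimensions some neighbors coincide.
    """
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            n[axis] = (n[axis] + step) % dims[axis]  # wraparound link
            neighbors.append(tuple(n))
    return neighbors


def torus_hops(a: Coord, b: Coord, dims: Coord) -> int:
    """Minimum number of hops between `a` and `b`, using wraparound links."""
    hops = 0
    for x, y, d in zip(a, b, dims):
        direct = abs(x - y)
        hops += min(direct, d - direct)  # go whichever way round is shorter
    return hops


dims = (4, 4, 4)  # hypothetical 64-node torus
print(torus_neighbors((0, 0, 0), dims))
print(torus_hops((0, 0, 0), (3, 3, 3), dims))
```

In this model the far corner (3, 3, 3) is only three hops from (0, 0, 0), whereas a mesh of the same size without wraparound links would need nine. That shorter worst-case distance is one reason torus topologies are used for tightly coupled systems.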

As the price/performance of general-purpose graphics processing units (GPGPUs) has improved, a number of petaflop supercomputers such as Tianhe-I and Nebulae have started to rely on them.[28] However, other systems such as the K computer continue to use conventional processors such as SPARC-based designs, and the overall applicability of GPGPUs to general-purpose high-performance computing has been the subject of debate: while a GPGPU may be tuned to score well on specific benchmarks, its applicability to everyday algorithms may be limited unless significant effort is spent tuning the application for it.[29] Nevertheless, GPUs are gaining ground, and in 2012 the Jaguar supercomputer was transformed into Titan by adding GPUs alongside its CPUs.[30][31][32]

A number of "special-purpose" systems have been designed, each dedicated to a single problem. This allows the use of specially programmed FPGA chips or even custom VLSI chips, giving better price/performance ratios by sacrificing generality. Examples of special-purpose supercomputers include Belle,[33] Deep Blue,[34] and Hydra[35] for playing chess, Gravity Pipe for astrophysics,[36] MDGRAPE-3 for protein structure computation and molecular dynamics,[37] and Deep Crack[38] for breaking the DES cipher.
